Past week:

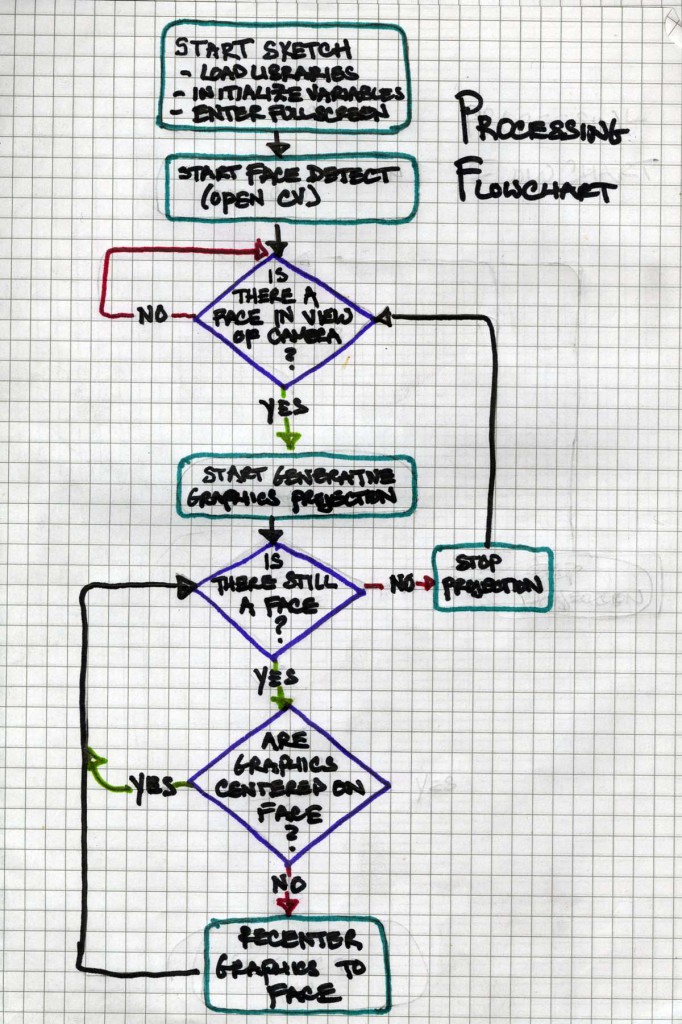

Over the break, I initially focused on implementing Kinect skeleton tracking. I spent a few days working through the book Making Things See and was able to get skeleton tracking working in Processing. However, I wasn’t satisfied with how well I could integrate it with the other generative elements I’ve been working on, so I eventually decided to stay with face recognition (OpenCV) as the primary mode of interactivity.

I further developed the generative graphics of my Processing sketch, trying to visually reinforce the themes of recursion and memory. I’m not sure how successful I was in this regard, but I’m happier with the overall aesthetic. I also spent a day experimenting with how the Processing sketch works as a projection in real space. This allowed me to troubleshoot the code and adapt it to an installation context.

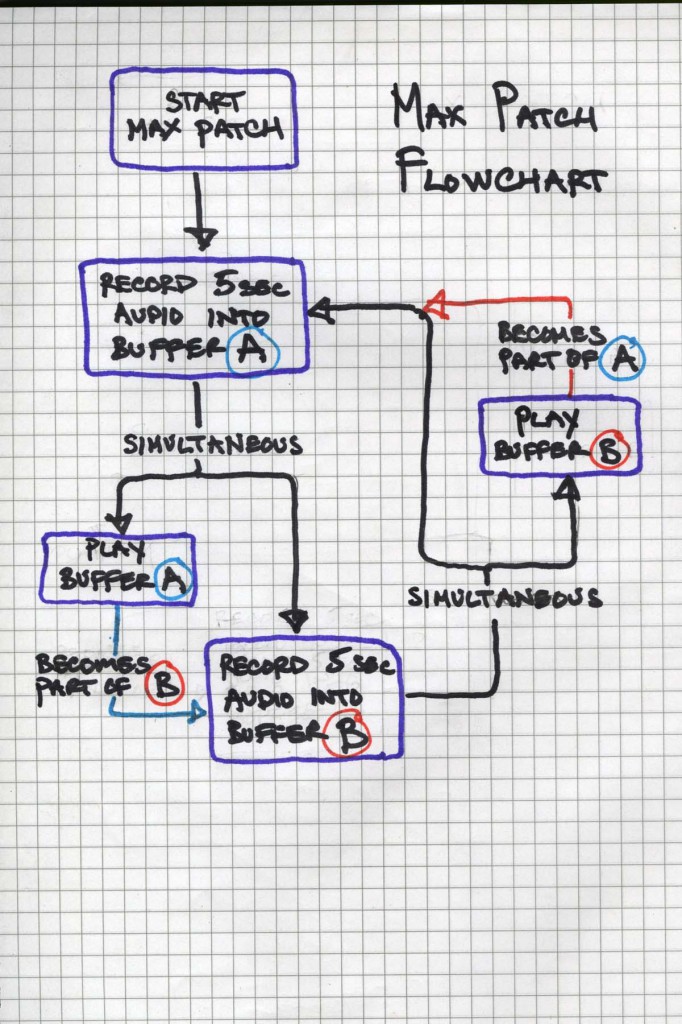

I also played around with my recursive audio Max patch in the installation space. I’m happier with how this represents the original theme, though at present the audio effect it generates is subtler than I’d like. I had some trouble avoiding feedback and other unwanted audio side effects, but was able to minimize these by adjusting the equipment and its placement.

Next week:

I may continue experimenting with Kinect skeleton tracking, as I still feel it can afford more options for interactivity. Ideally, I’d like to further integrate the Processing and Max elements so that they communicate with each other directly and inform each other’s output. I would also like to experiment with other installation space possibilities.
