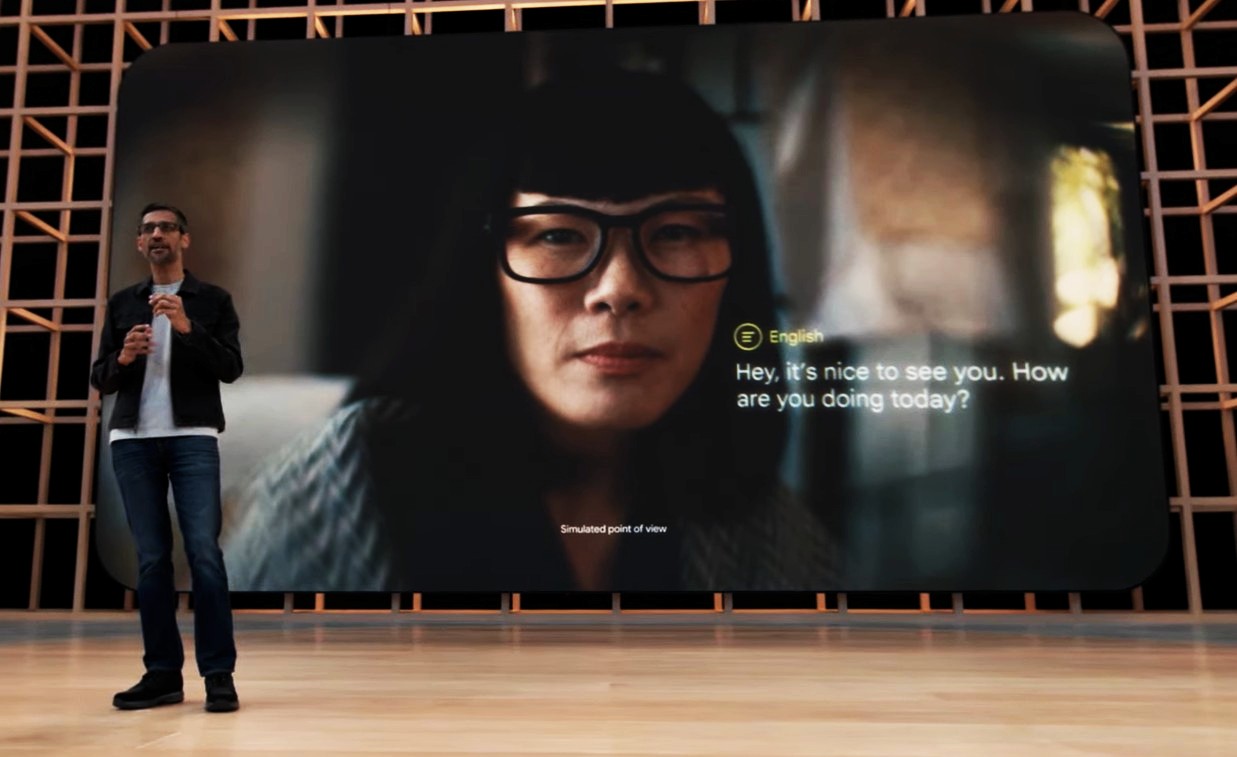

| Augmented Language: Translating Speech in Everyday Glasses |

Augmented Language was featured in the Google I/O 2022 Keynote. Live translation of speech in everyday glasses has the potential to make language more universally accessible and understandable.

"Let's see what happens when we take our advances in translation and transcription, and deliver them in your line-of-sight in one of the early prototypes that we have been testing." (Sundar Pichai, CEO Google) |

SpeechCompass: Enhancing Mobile Captioning with Diarization and Directional Guidance via Multi-Microphone Localization

Dementyev, A., Kanevsky, D., Yang, S., Parvaix, M., Lai, C., and Olwal, A.

Proceedings of CHI 2025 - Best Paper Award (Top 1%) (ACM CHI Conference on Human Factors in Computing Systems), Yokohama, Japan, Apr 26-May 1, 2025, pp. 1-17.

CHI 2025 - Best Paper Award (Top 1%)

|

![PDF](images/pdf.gif)

|

InstructPipe: Generating Visual Blocks Pipelines with Human Instructions and LLMs

Zhou, Z., Jin, J., Phadnis, V., Yuan, X., Jiang, J., Qian, X., Wright, K., Sherwood, M., Mayes, J., Zhou, J., Huang, Y., Xu, Z., Zhang, Y., Lee, J., Olwal, A., Kim, D., Iyengar, R., Li, N., and Du, R.

Proceedings of CHI 2025 - Best Paper Honorable Mention Award (Top 5%) (ACM CHI Conference on Human Factors in Computing Systems), Yokohama, Japan, Apr 26-May 1, 2025, pp. 1-22.

CHI 2025 - Best Paper Honorable Mention Award (Top 5%)

|

![PDF](images/pdf.gif)

|

Wearable Subtitles: Augmenting Spoken Communication with Lightweight Eyewear for All-day Captioning

Olwal, A., Balke, K., Votintcev, D., Starner, T., Conn, P., Chinh, B., and Corda, B.

Proceedings of UIST 2020 - Best Demo Honorable Mention Award (ACM Symposium on User Interface Software and Technology), Virtual Event, Oct 20-23, 2020, pp. 1108-1120.

UIST 2020 - Best Demo Honorable Mention Award

|

![PDF [16MB]](images/pdf.gif)

|

Quantifying The Effect of Simulator-Based Data Augmentation for Speech Recognition on Augmented Reality Glasses

Arakawa, R., Parvaix, M., Lai, C., Erdogan, H., and Olwal, A.

Proceedings of ICASSP 2024 (IEEE International Conference on Acoustics, Speech and Signal Processing), Seoul, South Korea, Apr 14-19, 2024, pp. 726-730.

ICASSP 2024

|

![PDF [2.4MB]](images/pdf.gif)

|

ChatDirector: Enhancing Video Conferencing with Space-Aware Scene Rendering and Speech-Driven Layout Transition

Qian, X., Tan, F., Zhang, Y., Collins, B., Kim, B., Olwal, A., Ramani, K., and Du, R.

Proceedings of CHI 2024 (SIGCHI Conference on Human Factors in Computing Systems), Honolulu, HI, May 11-16, 2024, pp. 1-16.

CHI 2024

|

![PDF [2.3MB]](images/pdf.gif)

|

Visual Captions: Augmenting Verbal Communication with On-the-fly Visuals

Liu, X., Kirilyuk, V., Yuan, X., Olwal, A., Chi, P., Chen, X., and Du, R.

Proceedings of CHI 2023 (SIGCHI Conference on Human Factors in Computing Systems), Hamburg, Germany, Apr 23-28, 2023, pp. 1-20.

CHI 2023

|

![PDF [30MB]](images/pdf.gif)

|

Modeling and Improving Text Stability in Live Captions

Liu, B., Zhang, J., Ferrer, L., Xu, S., Bahirwani, V., Smus, B., Olwal, A., and Du, R.

CHI 2023 Extended Abstracts (SIGCHI Conference on Human Factors in Computing Systems), Hamburg, Germany, Apr 23-28, 2023, pp. 1-9.

CHI 2023

|

![PDF [3MB]](images/pdf.gif)

|

|

| Electronic Textile: Making Soft Materials Interactive with Sensors and Displays |

Hidden Interfaces create extremely bright displays that can appear and disappear in wood, textile, plastic and mirrored surfaces. User interfaces can therefore blend into natural materials and environments without any compromise to their design or aesthetics.

E-Textile Microinteractions and I/O Braid make textiles interactive. We sense the user's proximity, touch and twist, and detect gestures, such as flicks, slides, pinches, grabs and pats, using machine learning and capacitive touch sensing. Fiber optics provide embedded light feedback. |

E-Textile Microinteractions: Augmenting Twist with Flick, Slide and Grasp Gestures for Soft Electronics

Olwal, A., Starner, T., and Mainini, G.

Proceedings of CHI 2020 (ACM CHI Conference on Human Factors in Computing Systems), Honolulu, HI, Apr 25-30, 2020, pp. 1-13.

CHI 2020

|

![PDF [19MB]](images/pdf.gif)

|

I/O Braid: Scalable Touch-Sensitive Lighted Cords Using Spiraling, Repeating Sensing Textiles and Fiber Optics

Olwal, A., Moeller, J., Priest-Dorman, G., Starner, T., and Carroll, B.

Proceedings of UIST 2018 - Best Demo Award (ACM Symposium on User Interface Software and Technology), Berlin, Germany, Oct 14-17, 2018, pp. 485-497.

UIST 2018 - Best Demo Award

|

![PDF [18MB]](images/pdf.gif)

|

SensorSnaps: Integrating Wireless Sensor Nodes into Fabric Snap Fasteners for Textile Interfaces

Dementyev, A., Galvez, T., and Olwal, A.

Proceedings of UIST 2019 (ACM Symposium on User Interface Software and Technology), New Orleans, LA, Oct 20-23, 2019, pp. 17-28.

UIST 2019

|

![PDF [4MB]](images/pdf.gif)

|

|

| Ubiquitous Sensing in Everyday Objects and Devices |

Haptics with Input introduces passive and active sensing for the Linear Resonant Actuator (LRA), which is widely used in wearable and mobile devices. We demonstrate new touch and pressure sensing, and how mobile devices can sense which surfaces they are placed on.

Google AI Blog: Haptics with Input -> |

Haptics with Input: Back-EMF in Linear Resonant Actuators to Enable Touch, Pressure and Environmental Awareness

Dementyev, A., Olwal, A., and Lyon, R.F.

Proceedings of UIST 2020 (ACM Symposium on User Interface Software and Technology), Virtual Event, Oct 20-23, 2020, pp. 420-429.

UIST 2020

|

![PDF [3MB]](images/pdf.gif)

|

Zensei: Embedded, Multi-electrode Bioimpedance Sensing for Implicit, Ubiquitous User Recognition

Sato, M., Puri, R., Olwal, A., Ushigome, Y., Franciszkiewicz, L., Chandra, D., Poupyrev, I., and Raskar, R.

Proceedings of CHI 2017 (SIGCHI Conference on Human Factors in Computing Systems), Denver, CO, May 6-11, 2017, pp. 3972-3985.

CHI 2017

|

![PDF [15MB]](images/pdf.gif)

|

SpecTrans: Versatile Material Classification for Interaction with Textureless, Specular and Transparent Surfaces

Sato, M., Yoshida, S., Olwal, A., Shi, B., Hiyama, A., Tanikawa, T., Hirose, M., and Raskar, R.

Proceedings of CHI 2015 (SIGCHI Conference on Human Factors in Computing Systems), Seoul, South Korea, Apr 18-23, 2015, pp. 2191-2200.

CHI 2015

|

![PDF [21MB]](images/pdf.gif)

|

SpeckleSense: Fast, Precise, Low-cost and Compact Motion Sensing using Laser Speckle

Zizka, J., Olwal, A., and Raskar, R.

Proceedings of UIST 2011 (ACM Symposium on User Interface Software and Technology), Santa Barbara, CA, Oct 16-19, 2011, pp. 489-498.

UIST 2011

|

![PDF [4.5MB]](images/pdf.gif)

|

|

| Shape Displays: Spatial Interaction with Dynamic Physical Form |

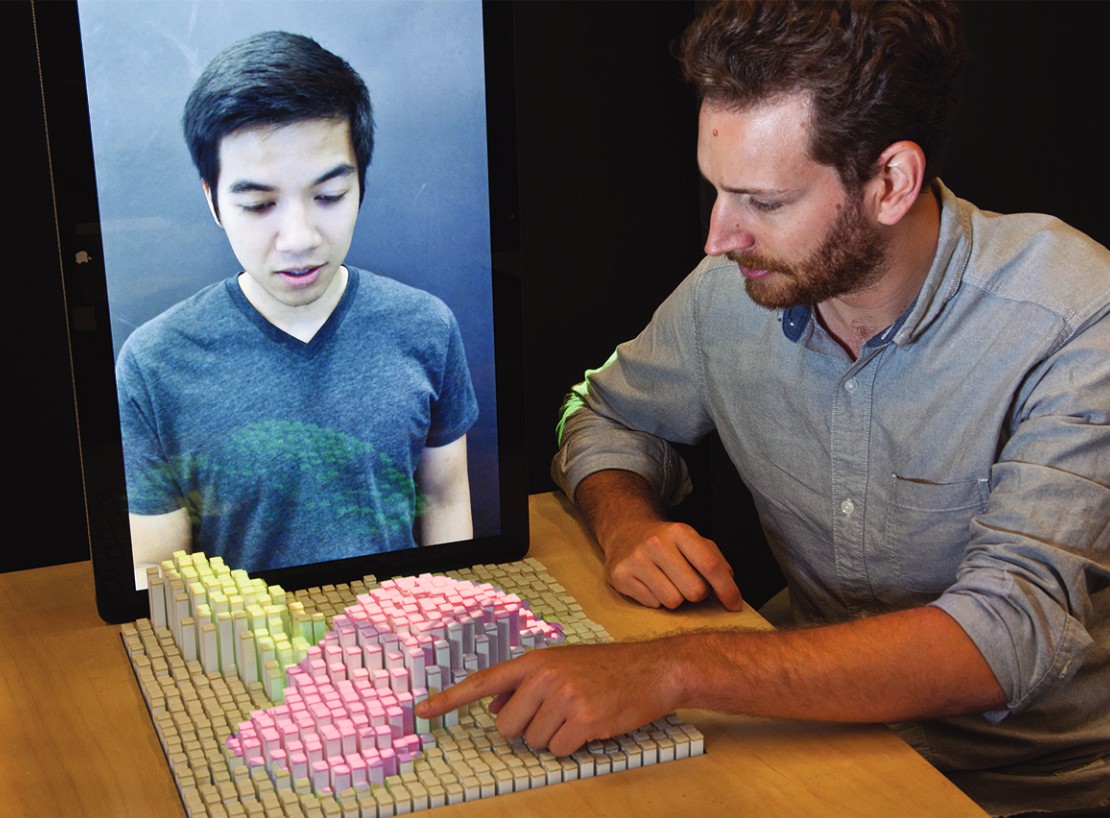

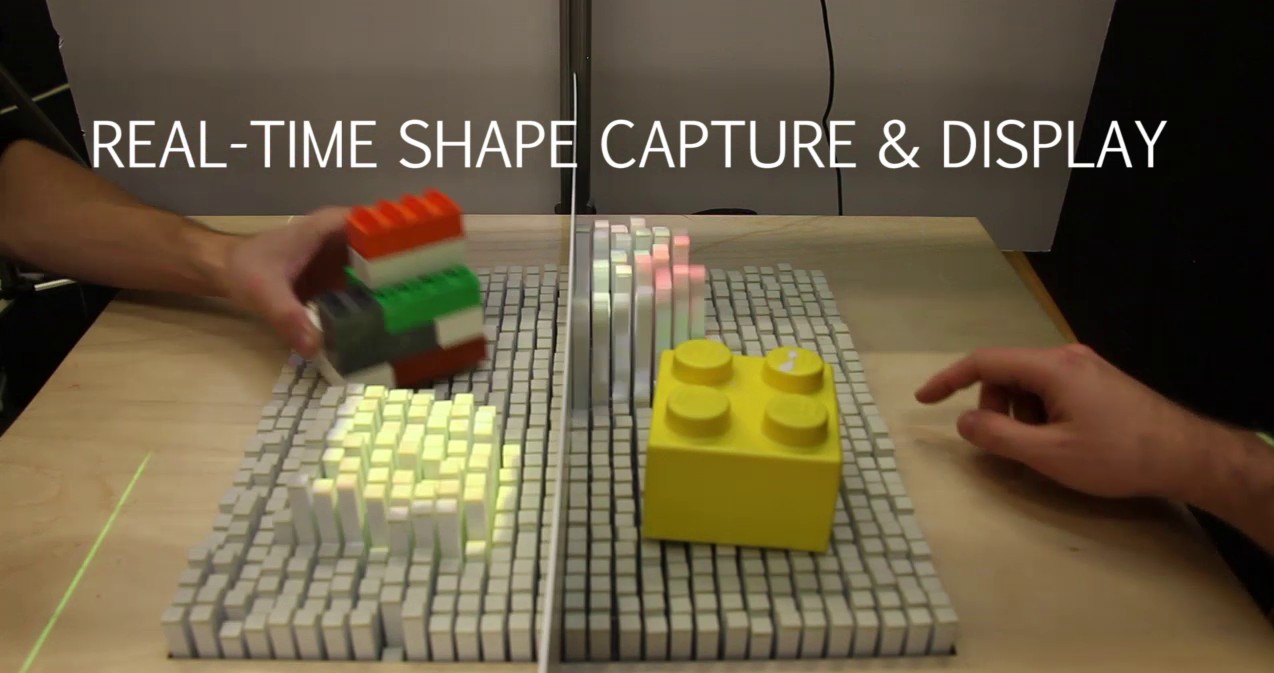

Physical Telepresence uses shape capture and display to physically enhance interactions with remote people and environments.

inFORM dynamically changes material and form to continuously adapt the physical and virtual interface to user interactions.

Sublimate explores rapid and fluid transitions between physical and visual representations of dynamic digital content.

Jamming User Interfaces enable programmable stiffness, haptic feedback and deformation, for new types of flexible and shape-changing interactions. |

Physical Telepresence: Shape Capture and Display for Embodied, Computer-mediated Remote Collaboration

Leithinger, D., Follmer, S., Olwal, A., and Ishii, H.

Proceedings of UIST 2014 (ACM Symposium on User Interface Software and Technology), Honolulu, HI, Oct 5-8, 2014, pp. 461-470.

UIST 2014

|

![PDF [5.5MB]](images/pdf.gif)

|

Shape Displays: Spatial Interaction with Dynamic Physical Form

Leithinger, D., Follmer, S., Olwal, A., and Ishii, H.

CG&A 2015 (IEEE Computer Graphics and Applications, Vol. 35, no. 5), Sep-Oct 2015, pp. 417-426.

CG&A 2015

|

![PDF [0.5MB]](images/pdf.gif)

|

inFORM: Dynamic Physical Affordances and Constraints through Shape and Object Actuation

Follmer, S., Leithinger, D., Olwal, A., Hogge, A., and Ishii, H.

Proceedings of UIST 2013 (ACM Symposium on User Interface Software and Technology), St Andrews, UK, Oct 8-11, 2013, pp. 417-426.

UIST 2013

|

![PDF [0.5MB]](images/pdf.gif)

|

Sublimate: State-Changing Virtual and Physical Rendering to Augment Interaction with Shape Displays

Leithinger, D., Follmer, S., Olwal, A., Luescher, S., Hogge, A., Lee, J., and Ishii, H.

Proceedings of CHI 2013 - Best Paper Honorable Mention Award (Top 5%) (SIGCHI Conference on Human Factors in Computing Systems), Paris, France, Apr 27-May 2, 2013, pp. 1441-1450.

CHI 2013 - Best Paper Honorable Mention Award (Top 5%)

|

![PDF [1MB]](images/pdf.gif)

|

Jamming User Interfaces: Programmable Particle Stiffness and Sensing for Malleable and Shape-Changing Devices

Follmer, S., Leithinger, D., Olwal, A., Cheng, N., and Ishii, H.

Proceedings of UIST 2012 - Best Paper Award (Top 1%) (ACM Symposium on User Interface Software and Technology), Cambridge, MA, Oct 7-10, 2012, pp. 519-528.

UIST 2012 - Best Paper Award (Top 1%)

|

![PDF [8.3MB]](images/pdf.gif)

|

|